
Exploring how the NIML system is affected by missing data, both during training and at inference

Missing data is a common issue. Data scientists have to deal with it before they can send their data through a traditional machine learning system. Using a process called imputation, the data scientist can fill in missing values in their dataset. But many questions come up in the process. Among them:

- If an observation contains a missing value for one or more features, should the observation be discarded?

- If the same feature is frequently missing (e.g., people often skip the "income bracket" question), should the feature be dropped from the dataset?

- What if this feature is highly informative when it is populated? Wouldn't it be nice to include the feature whenever it is present?

- Which imputation strategy should be used? Can/should multiple strategies be used?

- Doesn't data imputation introduce bias into the system? Can this be minimized?

Imputation adds a level of complexity on top of an already complex machine learning system. Even after choosing an imputation strategy, the data scientist may continue to second-guess whether the chosen strategy is sufficient. It would be incredibly convenient if a machine learning system could just operate on a dataset that has missing values.
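To make the "which strategy?" question concrete, here is a small pure-Python sketch (not part of NIML; the `impute_column` helper and the toy column are hypothetical). It shows that two common strategies, mean and median imputation, can fill the same gap with very different values when a feature is skewed by an outlier.

```python
from statistics import mean, median

MISSING = "?"  # missing-value marker, matching the "?" used later in this notebook

def impute_column(values, strategy):
    """Fill MISSING entries in a list using the mean or median of the present values."""
    present = [v for v in values if v != MISSING]
    if strategy == "mean":
        fill = mean(present)
    elif strategy == "median":
        fill = median(present)
    else:
        raise ValueError("unknown strategy: " + strategy)
    return [fill if v == MISSING else v for v in values]

# A skewed toy column (hypothetical values) with two missing entries
column = [10.0, 12.0, MISSING, 11.0, 95.0, MISSING]
print(impute_column(column, "mean"))    # gaps filled with 32.0
print(impute_column(column, "median"))  # gaps filled with 11.5
```

Neither answer is "correct"; the choice bakes an assumption about the missing values into the dataset, which is exactly the second-guessing described above.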

The NIML system does not require data imputation.

The challenges for this week focus on exploring how NIML (and perhaps other systems) handle missing data:

- Modify your own dataset by injecting missing values and see how NIML responds

- Take a dataset with missing values and impute. Compare/contrast how NIML behaves on the missing-value dataset versus the imputed dataset

- Take a missing-values dataset and run it through NIML and also through another ML system and compare/contrast the results from the two systems

We challenge you to take the concepts here and apply them to a dataset of your choosing, and then explore beyond that!

Step 1 - Data

First, we need to load in the dataset (we will use scikit-learn's wine dataset) and convert it into a form appropriate for NIML.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split
import random

random.seed(9411)  # for repeatable results

data_file = datasets.load_wine()

niml_data = []
for idx in range(len(data_file.data)):
    row = [str(data_file.target[idx])]  # column 0 = target label
    row.extend(data_file.data[idx])     # all other columns = feature values
    niml_data.append(row)

random.shuffle(niml_data)
```

What does missing data look like in NIML?

Let's briefly look at what NIML does when data is missing. In last week's challenge, we used a function to plot encoded data. We will use that function again this week. Here it is.

```python
import matplotlib.pyplot as plt

def plot_encoding(encodings, labels, sdr_width, num_features=None, title=""):
    colors = {'0': "red", '1': "blue", '2': "green"}
    plt.figure(figsize=(15, 1))  # max(1, len(encodings)/10)))
    # one row of dots per encoding, one dot per set bit, colored by class label
    for idx, encoding in enumerate(encodings):
        label = labels[idx]
        xvals = []
        yvals = []
        for value in encoding:
            yvals.append(idx)
            xvals.append(value)
        plt.scatter(xvals, yvals, color=colors[label], marker='o')
    # dotted vertical lines mark the boundary of each feature's slice of the SDR
    if num_features is not None:
        f_width = int(sdr_width / num_features)
        xpos = 0
        while xpos <= sdr_width:
            plt.plot([xpos, xpos], [-1, len(encodings)+1], color="grey", linestyle="dotted")
            xpos += f_width
    plt.yticks(list(range(len(encodings))))
    plt.ylim(-1, len(encodings)+1)
    plt.xlim(0, sdr_width)
    plt.title(title)
    plt.show()
```

Now let's create an encoder.

```python
# encode the data by creating an encoder, configuring it, and then performing the encoding on train/test
from niml.encoder import encoder

nf = len(niml_data[0]) - 1  # get the number of features in the dataset
my_encoder = encoder.Encoder(set_bits=5, sparsity=.5,
                             field_types=["N"]*nf,      # all features are numeric
                             cyclic_flags=[False]*nf,   # none of the fields are cyclic
                             spans=[0]*nf,              # use simple/basic encoding bit-patterns
                             cat_overlaps=[0]*nf,       # N/A as data is numeric, not categorical. Set all features to 0
                             cat_values=[None]*nf,      # N/A as data is numeric, not categorical. Set all features to None
                             )
my_encoder.config_encoder(input_data=niml_data, label_col=0)
```

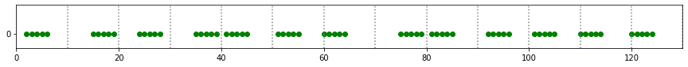

And now (for reference) we will encode a line of data from the wine dataset that has values for all features - nothing missing.

```python
line_to_encode = niml_data[0]
print("Data being encoded:\n ", line_to_encode)
labels, encodings, sdr_width = my_encoder.encode(input_data=[line_to_encode], label_col=0)
plot_encoding(encodings, labels, sdr_width, num_features=nf)
```

Data being encoded:

['2', 12.53, 5.51, 2.64, 25.0, 96.0, 1.79, 0.6, 0.63, 1.1, 5.0, 0.82, 1.69, 515.0]

Now let's take that same line of data and remove some of the values. We will replace them with the string "?", and we will tell the NIML system that "?" is the character string that means "a value is missing".

After removing two features, let's encode this same line of data again and see the result.

```python
import copy

my_encoder.null_value = "?"  # <------- One of two ways to tell the encoder what a MISSING VALUE looks like
missing_values_line = copy.deepcopy(niml_data[0])
missing_values_line[3] = "?"  # replace the 3rd feature with a "missing value"
missing_values_line[9] = "?"  # replace the 9th feature with a "missing value"
print("Data being encoded:\n ", missing_values_line)
labels, encodings, sdr_width = my_encoder.encode(input_data=[missing_values_line], label_col=0)
plot_encoding(encodings, labels, sdr_width, num_features=nf)
```

Data being encoded:

['2', 12.53, 5.51, '?', 25.0, 96.0, 1.79, 0.6, 0.63, '?', 5.0, 0.82, 1.69, 515.0]

The two graphs above show what happens when NIML comes across a missing value in the dataset. The NIML encoder simply skips over that field. No encoding is generated for that feature. When this encoding is passed into the NIML model, the model processes the input without any regard to the fact that some of the encoding bits are absent.
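The "skip the field" behavior can be illustrated with a toy scalar encoder. This is a hypothetical sketch, not NIML's `Encoder`: `toy_encode`, `bits_per_feature`, and the min/max scaling are assumptions chosen for illustration. Each feature owns a fixed slice of the bit array, a present value sets a run of `set_bits` bits whose position depends on the value, and a missing value simply sets nothing in its slice.

```python
MISSING = "?"

def toy_encode(row, mins, maxs, bits_per_feature=20, set_bits=5):
    """Return the indices of active bits for a feature row (label already removed).

    Each feature owns bits [f * bits_per_feature, (f + 1) * bits_per_feature).
    A MISSING feature contributes no bits at all.
    """
    active = []
    span = bits_per_feature - set_bits  # largest offset a run can start at
    for f, value in enumerate(row):
        if value == MISSING:
            continue  # skip: no bits are set for this feature's slice
        width = maxs[f] - mins[f]
        frac = (value - mins[f]) / width if width > 0 else 0.0
        frac = min(max(frac, 0.0), 1.0)  # clamp values outside the configured range
        start = f * bits_per_feature + round(frac * span)
        active.extend(range(start, start + set_bits))
    return active

mins, maxs = [0.0, 0.0, 0.0], [10.0, 10.0, 10.0]
print(toy_encode([0.0, 5.0, 10.0], mins, maxs))      # bits in all three slices
print(toy_encode([0.0, MISSING, 10.0], mins, maxs))  # slice 20..39 left empty
```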

The important concept to observe is that the NIML system has a semantic way to represent "there is no value here". This is a property not found in most other systems. Rather than having to replace a missing value with some placeholder, the NIML system allows missing values to have their own unique representation.

There is one unavoidable impact of missing values on the NIML system. When a missing-value observation is encoded and passed to the pooler component, the pooler has no "signal" to latch onto at the missing-value positions. So during the reinforcement portion of the algorithm, reinforcement can only be performed at positions where values are present. As a result, the pooler may converge (learn) slightly faster at some positions than at others.
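One rough way to gauge this effect on your own data is to count, per feature, how many observations actually supply a value. This sketch treats each present value as one reinforcement opportunity for that feature's slice of the encoding; that framing is a simplifying assumption, not a description of NIML's internal pooler, and `reinforcement_opportunities` is a hypothetical helper.

```python
MISSING = "?"

def reinforcement_opportunities(dataset, label_col=0):
    """Count, per feature, how many observations supply a (non-missing) value."""
    num_features = len(dataset[0]) - 1
    counts = [0] * num_features
    for row in dataset:
        features = row[:label_col] + row[label_col + 1:]  # drop the label column
        for f, value in enumerate(features):
            if value != MISSING:
                counts[f] += 1
    return counts

toy = [
    ["0", 1.0, MISSING, 3.0],
    ["1", 2.0, MISSING, MISSING],
    ["0", 1.5, 0.2, 3.1],
]
print(reinforcement_opportunities(toy))  # [3, 1, 2]
```

Features with low counts are the ones whose part of the pooler would be expected to converge more slowly.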

Note - imputation is not evil!

I want to pause briefly to point out that data imputation is NOT necessarily a bad practice. On the contrary, it is incredibly useful and has enabled real progress in machine learning. The NIML system is completely capable of taking in a data file that has gone through imputation. In fact, an imputed data file may perform BETTER in the NIML system than the same data file with missing values (exploring this is one of this week's challenges).

The downside, though, is that data imputation is a large research topic in its own right and can become quite a challenge. For the data scientist who has to deal with missing values, imputation presents a large hurdle that must be cleared before any attempt at machine learning can even start. The benefit of the NIML system is that data imputation is not a required step. You can send your missing-values data through NIML and see how the results look, then decide whether data imputation would help. With NIML you even have the option to do a partial imputation (impute one field, leave the other fields alone), and NIML will still run the data.
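A partial imputation can be as simple as filling one column and leaving the "?" markers everywhere else. The sketch below assumes the same row layout used in this notebook (label in column 0, "?" for missing); `impute_one_feature` is a hypothetical helper and mean imputation is just one possible choice.

```python
import copy
from statistics import mean

MISSING = "?"

def impute_one_feature(dataset, col):
    """Mean-impute a single column; every other column keeps its MISSING markers."""
    out = copy.deepcopy(dataset)  # leave the caller's dataset untouched
    present = [row[col] for row in out if row[col] != MISSING]
    fill = mean(present)
    for row in out:
        if row[col] == MISSING:
            row[col] = fill
    return out

toy = [
    ["0", 1.0, MISSING, 3.0],
    ["1", MISSING, 0.4, MISSING],
    ["0", 2.0, 0.2, 3.1],
]
partial = impute_one_feature(toy, col=1)  # column 0 holds the label
for row in partial:
    print(row)
```

The result could then be passed straight to the encoder, since the remaining "?" values are handled as missing.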

Step 2 - Baseline (no missing values)

As a second step in this challenge, let's establish a baseline for the wine dataset when there are no missing features. We will create an encoder and a model, go through the learning process, and then evaluate the trained system.

```python
# The base encoder - the one used above had parameters optimized for showing the
# "missing values" encoding.
my_encoder = encoder.Encoder(set_bits=15, sparsity=.1,
                             field_types=["N"]*nf,      # all features are numeric
                             cyclic_flags=[False]*nf,   # none of the fields are cyclic
                             spans=[0]*nf,              # use simple/basic encoding bit-patterns
                             cat_overlaps=[0]*nf,       # N/A as data is numeric, not categorical. Set all features to 0
                             cat_values=[None]*nf,      # N/A as data is numeric, not categorical. Set all features to None
                             missing_val_ind="?",       # <------- The second of two ways to tell the encoder what a MISSING VALUE looks like
                             )
my_encoder.config_encoder(input_data=niml_data, label_col=0)

from niml.model import model

sdr_width = my_encoder.sdr_width  # default sdr width for any newly created model

def get_new_model(seed_val=97543, sw=sdr_width):
    num_neurons = 3000
    new_model = model.Model(
        # Encoded Data parameters
        sdr_width=sw,         # Received from encoding the data
        enc_set_bits=3,       # The # of set-bits used per-feature during the encoding process (?)
        feat_count=nf,        # The wine dataset has 13 features
        # NPU
        neurons=num_neurons,
        active_neurons=80,
        input_pct=0.8,
        learning=True,
        synapse_inc=15,
        synapse_dec=3,
        activity_reset_cnt=50,
        # Boosting
        boost_max=0.9,
        boost_str=2,
        boost_tbl_size=21,
        boost_tbl_step=0.01,
        # Classifier
        subclass_thresh=0.75,
        history_depth=1500,
        min_overlap=0.0,
        seed=seed_val,
    )
    return new_model
```

```python
train, test = train_test_split(niml_data, test_size=0.28, random_state=727)
train_labels, train_isdrs, sdr_width = my_encoder.encode(input_data=train, label_col=0)
test_labels, test_isdrs, sdr_width = my_encoder.encode(input_data=test, label_col=0)

my_model = get_new_model()
my_model.fit(labels=train_labels, isdrs=train_isdrs, epochs=8)
res = my_model.evaluate(labels=test_labels, isdrs=test_isdrs)
print(" Accuracy: ", res["accuracy_score"])
```

starting epoch 0

starting epoch 1

starting epoch 2

starting epoch 3

starting epoch 4

starting epoch 5

starting epoch 6

starting epoch 7

Accuracy: 0.96

96% accuracy... This seems like a decent starting place. Let's move on from here.

Step 3 - Missing data at Inference

Let's consider the scenario where a model has been very carefully constructed and is now running in a production environment. The model was constructed using a dataset that has NO missing values.

Out in production though, the data being presented to the system is not clean. Some observations have missing values for certain features. Can the system still make good predictions under these conditions?

Creating missing values

Here is a small subroutine that replaces some of the true values in the dataset with missing values. We will use it on both our train dataset and our test dataset.

```python
import copy

def inject_missing_values(pct, dataset):
    # Make a copy of the dataset, overwrite PCT percentage of the values with "?"
    int_dataset = copy.deepcopy(dataset)
    num_rows = len(int_dataset)
    num_cols = len(int_dataset[0]) - 1  # Assumes labels are in column 0
    total_values = num_rows * num_cols
    num_missing = int(pct * total_values)
    all_positions = list(range(total_values))
    random.shuffle(all_positions)
    missing_ones = all_positions[:num_missing]
    for pos in sorted(missing_ones):
        row = pos // num_cols
        col = pos % num_cols
        int_dataset[row][col+1] = "?"  # again, assumes labels are in column 0
    return int_dataset
```

To demonstrate how this function works, we will:

- print out the original dataset (first 10 observations)

- generate a missing-values dataset with 30% missing values

- print out the missing-values dataset (first 10 observations)

The result is that 30% of the values are picked, at random, and those are converted into "?" as if the value were missing.

```python
print("Original dataset:")
for idx, row in enumerate(test[:10]):
    print(" observation #", idx, row)

pm = 0.3
print("-"*50)
test_missing = inject_missing_values(pm, test)
print("Dataset with %2.1f%% missing values:" % (pm*100))
for idx, row in enumerate(test_missing[:10]):
    print(" observation #", idx, row)
```

Original dataset:

observation # 0 ['1', 12.43, 1.53, 2.29, 21.5, 86.0, 2.74, 3.15, 0.39, 1.77, 3.94, 0.69, 2.84, 352.0]

observation # 1 ['1', 11.64, 2.06, 2.46, 21.6, 84.0, 1.95, 1.69, 0.48, 1.35, 2.8, 1.0, 2.75, 680.0]

observation # 2 ['0', 14.38, 1.87, 2.38, 12.0, 102.0, 3.3, 3.64, 0.29, 2.96, 7.5, 1.2, 3.0, 1547.0]

observation # 3 ['2', 12.81, 2.31, 2.4, 24.0, 98.0, 1.15, 1.09, 0.27, 0.83, 5.7, 0.66, 1.36, 560.0]

observation # 4 ['0', 13.28, 1.64, 2.84, 15.5, 110.0, 2.6, 2.68, 0.34, 1.36, 4.6, 1.09, 2.78, 880.0]

observation # 5 ['1', 13.05, 3.86, 2.32, 22.5, 85.0, 1.65, 1.59, 0.61, 1.62, 4.8, 0.84, 2.01, 515.0]

observation # 6 ['1', 11.66, 1.88, 1.92, 16.0, 97.0, 1.61, 1.57, 0.34, 1.15, 3.8, 1.23, 2.14, 428.0]

observation # 7 ['2', 12.36, 3.83, 2.38, 21.0, 88.0, 2.3, 0.92, 0.5, 1.04, 7.65, 0.56, 1.58, 520.0]

observation # 8 ['2', 14.16, 2.51, 2.48, 20.0, 91.0, 1.68, 0.7, 0.44, 1.24, 9.7, 0.62, 1.71, 660.0]

observation # 9 ['2', 12.51, 1.24, 2.25, 17.5, 85.0, 2.0, 0.58, 0.6, 1.25, 5.45, 0.75, 1.51, 650.0]

--------------------------------------------------

Dataset with 30.0% missing values:

observation # 0 ['1', 12.43, 1.53, '?', 21.5, 86.0, 2.74, 3.15, 0.39, 1.77, '?', 0.69, 2.84, '?']

observation # 1 ['1', 11.64, 2.06, 2.46, '?', '?', 1.95, 1.69, 0.48, 1.35, 2.8, 1.0, 2.75, 680.0]

observation # 2 ['0', 14.38, 1.87, 2.38, '?', 102.0, '?', 3.64, '?', 2.96, 7.5, 1.2, 3.0, 1547.0]

observation # 3 ['2', '?', '?', 2.4, 24.0, '?', 1.15, 1.09, 0.27, '?', 5.7, '?', 1.36, 560.0]

observation # 4 ['0', 13.28, 1.64, 2.84, 15.5, 110.0, '?', 2.68, '?', 1.36, 4.6, 1.09, '?', 880.0]

observation # 5 ['1', 13.05, '?', 2.32, 22.5, '?', 1.65, '?', 0.61, 1.62, '?', '?', 2.01, 515.0]

observation # 6 ['1', 11.66, '?', '?', '?', '?', '?', 1.57, 0.34, 1.15, 3.8, '?', 2.14, 428.0]

observation # 7 ['2', 12.36, 3.83, 2.38, '?', '?', 2.3, '?', 0.5, 1.04, 7.65, 0.56, 1.58, 520.0]

observation # 8 ['2', 14.16, '?', 2.48, 20.0, 91.0, 1.68, '?', 0.44, 1.24, 9.7, 0.62, '?', 660.0]

observation # 9 ['2', '?', 1.24, 2.25, 17.5, 85.0, '?', '?', 0.6, 1.25, 5.45, 0.75, '?', 650.0]
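Because `inject_missing_values()` truncates the count with `int()`, the realized fraction can come out slightly below the requested one. A quick sanity check is to count the markers directly. `missing_fraction` is a hypothetical helper, shown here on a tiny toy dataset; in the notebook you could call it on `test_missing`.

```python
MISSING = "?"

def missing_fraction(dataset, label_col=0):
    """Fraction of feature values (label column excluded) that equal MISSING."""
    total = 0
    missing = 0
    for row in dataset:
        for c, value in enumerate(row):
            if c == label_col:
                continue  # the label is never counted as a feature value
            total += 1
            if value == MISSING:
                missing += 1
    return missing / total if total else 0.0

toy = [["0", 1.0, MISSING], ["1", MISSING, MISSING]]
print(missing_fraction(toy))  # 0.75
```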

Now let's take the baseline model created above and run a missing-values dataset through it. In fact, we will run multiple missing-values datasets through it, with a different amount of missing data at each step, and then plot the system's accuracy on each set of missing-values observations.

```python
random.seed(19)  # for repeatable results when inject_missing_values() runs
x_pct_missing = []
y_accuracy = []
for p5mark in range(21):
    pm = 0.05*p5mark
    test_missing = inject_missing_values(pm, test)
    test_labels, test_isdrs, sdr_width = my_encoder.encode(input_data=test_missing, label_col=0)
    res = my_model.evaluate(labels=test_labels, isdrs=test_isdrs)
    x_pct_missing.append(pm)
    y_accuracy.append(res["accuracy_score"])
#print(y_accuracy)
```

```python
import matplotlib.pyplot as plt

plt.figure(figsize=(20, 8))
plt.plot(x_pct_missing, y_accuracy)
plt.scatter(x_pct_missing, y_accuracy)
plt.title("INFERENCE: Percent Missing values vs. Accuracy")
plt.xlabel('Percent missing values')
plt.xticks([x/10 for x in list(range(11))])
plt.ylabel('Accuracy')
plt.ylim(0.0, 1.1)
plt.yticks([x/10 for x in list(range(11))])
plt.grid()
plt.show()
```

You will notice some points where the accuracy increases even though the missing data percentage has increased. Note that at each measurement point in the loop we are re-generating the missing values dataset:

line 6: test_missing = inject_missing_values(pm, test)

Each of these calls is unique in which specific values are over-written with a missing-value indicator. It should not be surprising that some calls randomly happen to remove higher-importance information while other calls randomly remove lower-importance information. The overall trend is what we should focus on. Repeated calls (an exercise for the reader) will each produce a unique plot. But the overall trend is consistent - the more information removed, the less accurate the system.
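One way to see the trend through the noise is to repeat each measurement several times with different random seeds and average. In this sketch `accuracy_curve` and the `evaluate_at(pct)` callable are hypothetical; `evaluate_at` is assumed to inject missing values using the global `random` module and return an accuracy score.

```python
import random
from statistics import mean, stdev

def accuracy_curve(evaluate_at, pcts, n_trials=5, base_seed=0):
    """Average a noisy accuracy measurement over several seeded trials per point.

    Returns (means, spreads): the mean and sample standard deviation per pct.
    """
    means, spreads = [], []
    for i, pct in enumerate(pcts):
        scores = []
        for t in range(n_trials):
            random.seed(base_seed + i * n_trials + t)  # repeatable, distinct seed per trial
            scores.append(evaluate_at(pct))
        means.append(mean(scores))
        spreads.append(stdev(scores) if n_trials > 1 else 0.0)
    return means, spreads
```

In this notebook, `evaluate_at` could wrap the three calls from the loop above: `inject_missing_values(pct, test)`, `my_encoder.encode(...)`, and `my_model.evaluate(...)["accuracy_score"]`. Plotting the means (optionally with the spreads as error bars) should give a smoother picture than a single pass.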

Step 4 - Missing data at Training

What about training on a dataset with missing values? Can the NIML system still create a model that results in high-accuracy predictions at inference time?

Let's generate a graph similar to the one above. We will take measurements at 5% increments. At each measurement point we will:

- Overwrite some of the training dataset with missing-values

- Create a model

- Train the model on a missing-values dataset

- Evaluate the model on the test dataset

We will then plot the accuracy of the trained model versus the amount of data overwritten by missing values.

```python
%%capture
#####################
# Note - this block can take ~3 minutes to complete
import time
start = time.time()
train, test = train_test_split(niml_data, test_size=0.28, random_state=123)
y_pooler_res = []
x_pct_missing = []
for p5mark in range(15):
    pm = 0.05*p5mark
    x_pct_missing.append(pm)
    # inject into a fresh copy of the clean training set, so each point has pm missing
    # (reassigning `train` here would compound the missing values from earlier iterations)
    train_missing = inject_missing_values(pm, train)
    train_labels, train_isdrs, sdr_width = my_encoder.encode(input_data=train_missing, label_col=0)
    test_labels, test_isdrs, sdr_width = my_encoder.encode(input_data=test, label_col=0)
    my_model = get_new_model()
    my_model.fit(labels=train_labels, isdrs=train_isdrs, epochs=8)
    res = my_model.evaluate(labels=test_labels, isdrs=test_isdrs)
    y_pooler_res.append(res["accuracy_score"])
end = time.time()
```

```python
import matplotlib.pyplot as plt

plt.figure(figsize=(20, 8))
plt.plot(x_pct_missing, y_pooler_res)
plt.scatter(x_pct_missing, y_pooler_res)
plt.title("percent missing vs. accuracy")
plt.xlabel('Pct missing')
plt.xticks([x/10 for x in list(range(11))])
plt.ylabel('Accuracy')
plt.ylim(0.0, 1.1)
plt.yticks([x/10 for x in list(range(11))])
plt.grid()
plt.show()
```

From this graph one can see that the system does not show the same robustness to missing data as it did in Step 3 (missing data at inference). Still, the system is able to train to within 10% of the baseline accuracy with up to 25% of the data missing.

It is possible the hyperparameters of the system could be adjusted to get even better performance. This is an area worthy of exploration.
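A simple starting point for that exploration is an exhaustive grid search. This is a generic sketch: `grid_search` and `train_and_score` are hypothetical, and since `get_new_model()` as written only exposes `seed_val` and `sw`, `train_and_score` would need a variant that forwards the chosen settings to `model.Model`, trains on the missing-values training set, and returns the test accuracy.

```python
import itertools

def grid_search(train_and_score, grid):
    """Try every combination in `grid` and return (best_score, best_params).

    `grid` maps a parameter name to a list of candidate values.
    """
    names = sorted(grid)
    best_score, best_params = float("-inf"), None
    for values in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        score = train_and_score(params)
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params

# Candidate values for two of the get_new_model() settings (illustrative only)
grid = {"active_neurons": [40, 80, 120], "synapse_inc": [10, 15, 20]}
```

Each combination means a full training run, so with training taking minutes per point it is worth keeping the grid small.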

Conclusions

The "missing data" problem does not exist in all datasets. But when it does exist, it can create a sizeable barrier to machine learning. There are techniques to deal with missing data, but the fact that there are multiple imputation strategies bolsters the claim that there is no single "best" imputation solution that can be applied universally.

The NIML system offers possibilities that are not available in most traditional ML systems:

- Don't impute your data. Generate a baseline using missing-values data and then decide whether to impute.

- Don't impute ALL of your data. Choose which features to impute, and leave other fields alone.

- Train a system on "clean data", but then allow for "dirty data" at runtime, after adequate testing has built confidence that the system will still perform to an acceptable level.
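For the "clean training data, dirty runtime data" option, one way to turn an inference curve like the one from Step 3 into a deployment rule is to find the largest missing fraction at which accuracy stays above a threshold you choose. `max_tolerable_missing` is a hypothetical helper; it conservatively stops at the first point that falls below the threshold, even if a later (noisier) point recovers.

```python
def max_tolerable_missing(pcts, accuracies, min_accuracy):
    """Largest missing fraction whose accuracy, and that of every smaller
    fraction measured, is >= min_accuracy. Assumes pcts sorted ascending.
    Returns None if even the first point falls short."""
    tolerable = None
    for pct, acc in zip(pcts, accuracies):
        if acc < min_accuracy:
            break  # stop at the first failure rather than trusting later recoveries
        tolerable = pct
    return tolerable

# e.g. max_tolerable_missing(x_pct_missing, y_accuracy, min_accuracy=0.90)
```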

A reminder of the specific challenges proposed at the top of this document:

- Modify your own dataset by injecting missing values and see how NIML responds

- Take a dataset with missing values and impute. Compare/contrast how NIML behaves on the missing-value dataset versus the imputed dataset

- Take a missing-values dataset and run it through NIML and also through another ML system and compare/contrast the results from the two systems

Feel free to dig into any other facets that interest you!